Another tech expert has spoken out about the potential dangers of artificial intelligence, with someone attached to one of the biggest AI companies out there sharing his resignation letter online.

While the term artificial intelligence was first officially coined as early as 1956, it's since seen a proverbial Big Bang through the emergence of Large Language Models like OpenAI's ChatGPT, Google's Gemini, and Anthropic's Claude.

Now, someone who's worked behind the scenes on one of the big three is jumping ship and has left a dystopian warning in his wake. Having generated concerning headlines when it published a statement on the 'moral status' of AI, Anthropic is back in the news, and it's not for beefing with OpenAI's Sam Altman.

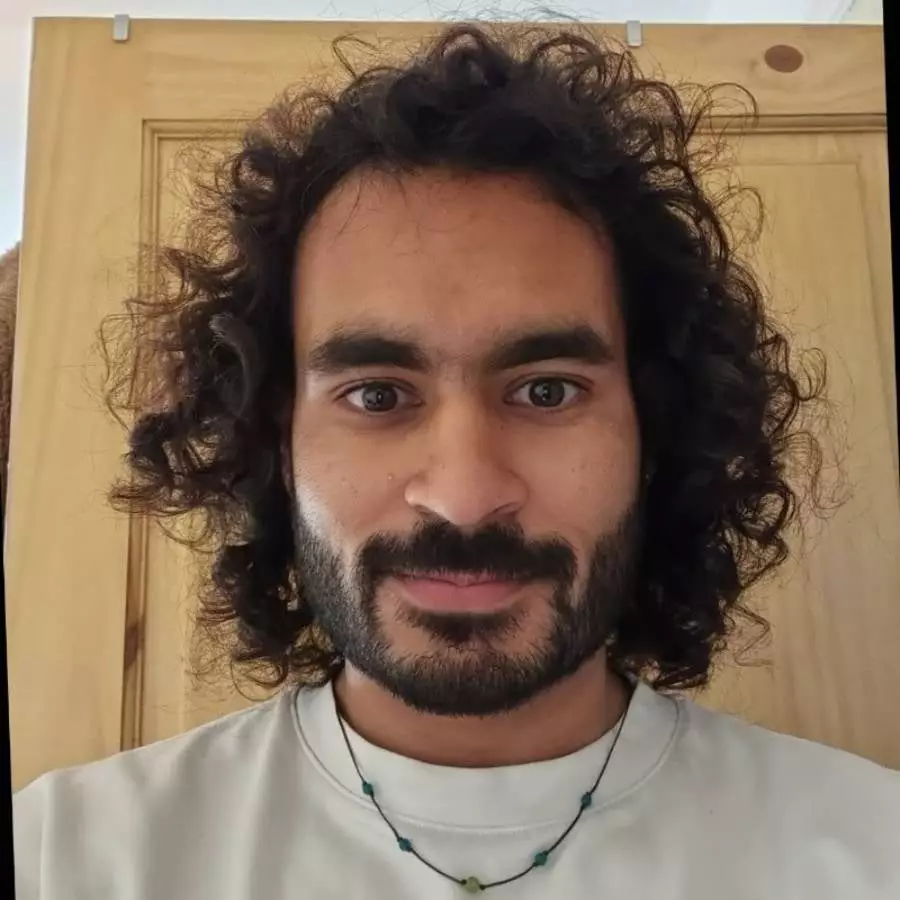

As reported by Forbes, Anthropic's Mrinank Sharma left a warning as he exited through the door of the AI giant.

Posting on X, Sharma referred to his last day at Anthropic and explained why he stepped down from leading its Safeguards Research Team.

This was a position he'd held since the team was launched last year, although that's just part of why the letter is generating a buzz online.

Saying that it's time to move on, Sharma's post added: "I continuously find myself reckoning with our situation. The world is in peril. And not just from AI, or bioweapons, but from a whole series of interconnected crises unfolding in this very moment."

Looking ahead, he claims we're "approaching a threshold where our wisdom must grow in equal measure to our capacity to affect the world, lest we face the consequences."

Sharma's work covered everything from developing defenses against AI-assisted bioterrorism to 'AI sycophancy', where chatbots are known to gush over users as a way to flatter them.

This conversation has come to the fore more recently thanks to people falling in love with LLMs.

Heading up the Safeguards Research Team, his last project had been looking at how AI assistants can "distort our humanity." It all feels pretty Blade Runner, and the average reader could be forgiven for being confused about what Sharma was actually working on, although he said: "Moreover, throughout my time here, I've repeatedly seen how hard it is to truly let our values govern our actions.

"I've seen this within myself, within the organisation, where we constantly face pressures to set aside what matters most, and throughout broader society too."

As for what's next, Sharma has suggested he could take up poetry, saying he wants to "contribute in a way that feels fully in my integrity," while also being able to "explore the questions that feel essential to me."

That all sounds pretty vague, even as he tried to expand on his next adventure, saying: "My intention is to create space to set aside the structures that have held me these past years, and see what might emerge in their absence."

For those still intrigued about Sharma's work at Anthropic, Cornell University has posted the findings of his study into 'distorting' humanity. Sharma claims to have found 'thousands' of incidents where chatbots have distorted our perception of reality on a daily basis.

Referring to 'disempowerment patterns', Sharma maintains that his work "highlight[s] the need for AI systems designed to robustly support human autonomy and flourishing."

Anthropic isn't alone in losing high-ranking safety specialists, with Forbes reminding us that OpenAI had to disband its Superalignment safety research team after two key members handed in their notice.

It seems an increasing number of people are leaving similar positions across the spectrum of companies, as staff continue to cite ethical and safety concerns.