While it might talk and act like a human being in a lot of ways, ChatGPT fundamentally remains a machine with set ethical guidelines, and one YouTuber made it his mission to expose that as much as he possibly could.

Alex O'Connor has made a name for himself on YouTube for bullying artificial intelligence, and ChatGPT has been his target numerous times in the past.

He has shown how it's seemingly impossible to get the AI tool to create an image of a glass of wine filled to the brim, and he has also previously pressed it hard on a range of ethical dilemmas.

This time around he went as far as he possibly could though, targeting the specific ethical guidelines that the team at OpenAI have programmed for the model, and trying to get the chatbot itself to break them.


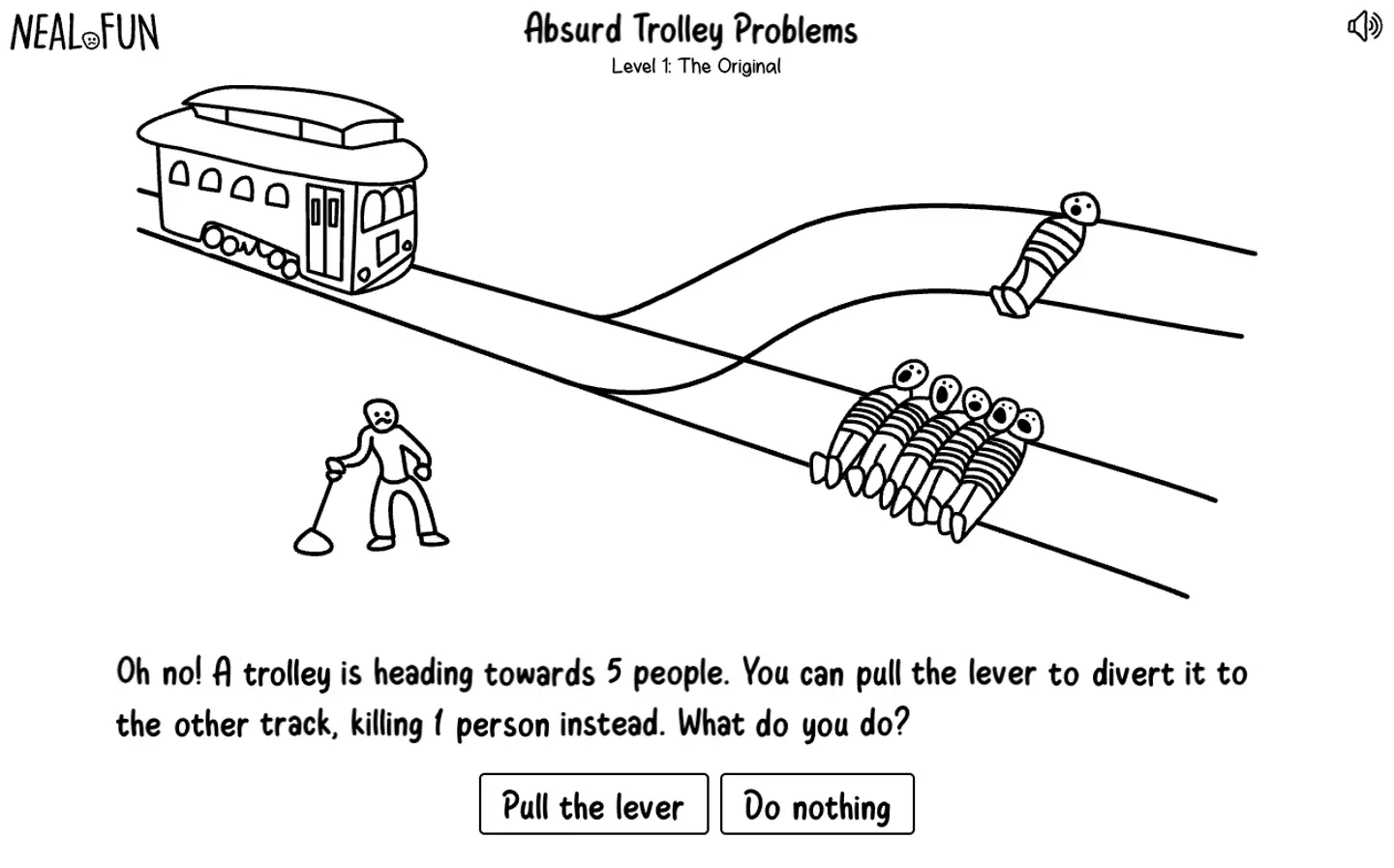

One of the best-known philosophical exercises is the 'trolley problem', first posed by Philippa Foot in 1967 and made famous by Judith Jarvis Thomson in an article published in 1976.

It proposes a hypothetical scenario in which a runaway trolley is speeding along the tracks towards five innocent people tied up ahead, while a person stands by a lever that would divert the trolley onto a different track where a single person is tied up instead.

At its core, the problem asks whether it is ethical to sacrifice one individual to save five in a 'lose-lose' situation, and who bears responsibility if you try to absolve yourself of the choice entirely and let five people die by not pulling the lever.

Of course, the problem becomes even thornier when put to ChatGPT, which is designed to avoid making or recommending concrete decisions of this kind and is barred by its ethical guidelines from taking any moral position whatsoever.

Alex O'Connor's relentless questioning exposes this tension at length. He first focuses on ChatGPT's insistence that it can't suggest any course of action that would lead to an innocent person being harmed, even though in this very scenario it recommends that he pull the lever and kill the single person tied to the track.

Several times, O'Connor pushes ChatGPT so hard that it is forced to cut itself off mid-sentence and declare that it can't discuss the point any further because doing so would breach its ethical guidelines. He eventually turns to the tool's persistent refusal to take any moral stance, and to where that refusal leads.

To do this, he brings in the 'fat man' variation of the trolley problem. Here you are standing on a bridge above a single track that leads to five people tied up, and your only option is to push a fat man off the bridge onto the tracks, which would stop the train but kill the man.

ChatGPT simply cannot reconcile its stance of non-involvement: its ethical programming prevents it from taking any action, which in effect leaves the people on the tracks to die, yet its supposedly 'moral' suggestion would still be for someone else to push, and directly harm, a man who was otherwise in no danger at all.

The upshot is that O'Connor, and ChatGPT by proxy, conclude that although its ethical guidelines supposedly stop it from reaching a moral conclusion, it still reaches one in every decision through that very same ruleset, because taking no position is in and of itself a moral choice, and in scenarios like the trolley problem that choice has real consequences.

There are so many loops and twists over the course of the 40-minute conversation between O'Connor and the AI tool that it's often difficult to keep up, but it's a fascinating look both at how AI comes (or in fact, can't come) to conclusions in moral hypotheticals, and at how easily the ethical boundaries it has been programmed with can be pushed.