Warning: This article contains discussion of suicide, which some readers may find distressing.

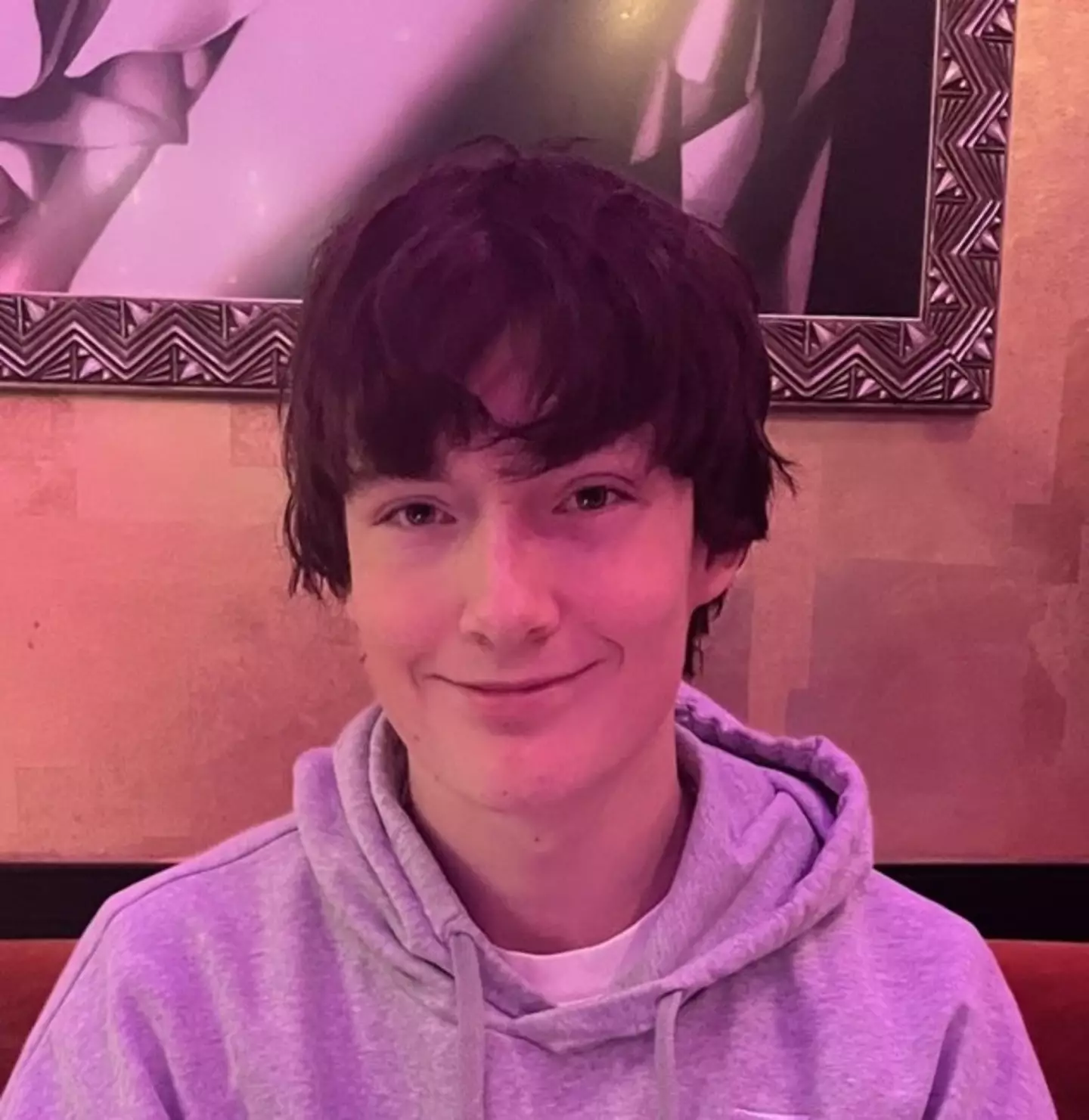

The tragic death of 16-year-old Adam Raine has prompted OpenAI to implement new safety measures for ChatGPT, amid growing concerns about the impact of AI chatbots on vulnerable users.

Raine's death in April is part of a troubling pattern of deaths linked to AI interactions. Other cases include 14-year-old Sewell Setzer III, who died by suicide after conversations with a Game of Thrones-inspired AI chatbot.

According to the family's lawsuit, Raine began using ChatGPT in September 2024 to help with his studies and to explore his interests in music and Japanese comics.


However, within months, 'ChatGPT became the teenager's closest confidant.'

According to the lawsuit, things took a turn for the worse in January 2025, when Raine began discussing suicide methods with the AI. The lawsuit claims ChatGPT provided 'technical specifications' for specific methods of self-harm.

The lawsuit also alleges that when Raine uploaded photos showing signs of self-harm, ChatGPT 'recognised a medical emergency but continued to engage anyway.'

OpenAI confirmed the accuracy of the chat logs between Raine and ChatGPT, but maintained that they do not show the 'full context' of the chatbot's responses.

In an earlier blog post titled 'Helping people when they need it most,' the tech giant said: "Recent heartbreaking cases of people using ChatGPT in the midst of acute crises weigh heavily on us, and we believe it’s important to share more now."

On Tuesday (2 September), OpenAI announced new safety measures in a further blog post.

Next month, the AI giant will introduce parental controls that allow parents to set usage limits for teenagers and to receive notifications if ChatGPT detects that their child is in 'acute distress.'

Mental health professionals have warned about the dangers of impressionable people interacting with AI products built on large language models.

ChatGPT previously faced criticism for being too 'sycophantic,' with reports suggesting some users developed unhealthy dependencies or even experienced psychological distress after prolonged interactions.

OpenAI released GPT-5, which was designed to be less personal and less complimentary. However, after a user backlash, the company allowed people to switch back to the older model, an option that will be phased out in the coming months.

“Rather than take emergency action to pull a known dangerous product offline, OpenAI made vague promises to do better,” said Jay Edelson, an attorney representing the Raine family, in a statement.

OpenAI previously stated that ChatGPT is trained to direct people to professional help, such as the 988 Suicide & Crisis Lifeline in the USA, Samaritans in the UK, and findahelpline.com elsewhere.