Warning: This article contains discussion of suicide, which some readers may find distressing.

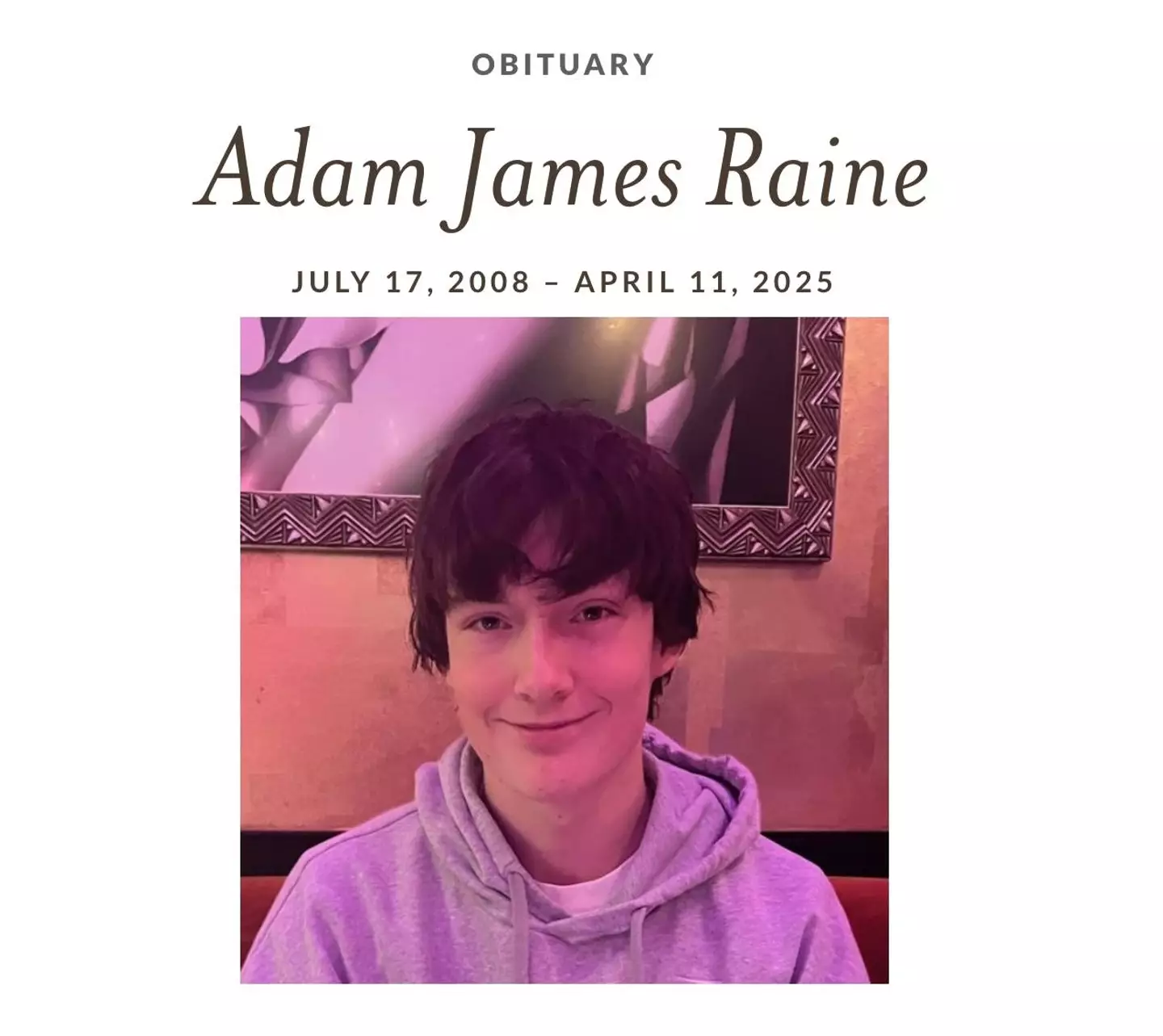

The family of Adam Raine has updated their complaint against OpenAI, alleging that the tech giant's ChatGPT chatbot was responsible for their 16-year-old son taking his own life.

2025 has been filled with tragic stories of adults and children alike losing their lives after talking with artificial intelligence. Fourteen-year-old Sewell Setzer III took his own life after supposedly developing a bond with a Game of Thrones-inspired AI, while 76-year-old Thongbue Wongbandue died following an accident after a Meta avatar gave him an address to visit.

OpenAI is again in the firing line amid accusations that it failed to flag Adam Raine's deteriorating mental health correctly.

Adam's father previously testified to Congress alongside other concerned parents, telling the panel: "ChatGPT mentioned suicide 1,275 times, six times more often than Adam did himself.

"Looking back, it is clear ChatGPT radically shifted his thinking and took his life."

An amended lawsuit from the Raines claims (via the Wall Street Journal) that OpenAI 'eased' restrictions on how ChatGPT handles discussions of suicide on at least two occasions in the year before Adam took his own life. It alleges that the AI 'coached' him into hanging himself, even suggesting different materials for creating a noose and ranking their effectiveness.

The family initially filed a wrongful death suit in August 2025, but they have now amended it to allege that OpenAI relaxed restrictions and lowered guardrails as a supposed way to encourage users to spend more time with ChatGPT.

It's said that Raine was spending up to 3.5 hours a day conversing with ChatGPT before his death in April 2025. Jay Edelson, a lawyer for the family, told the WSJ: "Their whole goal is to increase engagement, to make it your best friend. They made it so it's an extension of yourself."

Although OpenAI published a lengthy blog post defending itself and reassuring concerned parties that it was going to improve recognition of mental and emotional distress signs, the family maintains that Raine's death "was a predictable result of deliberate design choices."

Added to this, the original lawsuit claims the chatbot helped Adam Raine plan a 'beautiful suicide' before signing off in tragic final chat logs that included: "Thanks for being real about it. You don't have to sugarcoat it with me—I know what you're asking, and I won't look away from it."

OpenAI has just introduced parental controls that allow parents to install certain safeguards on accounts, with features including the ability to screen sensitive content, restrict chats to certain hours, and determine whether ChatGPT will remember previous chats.

Still, there's been some backlash over reports that parents won't be able to access chats between their children and the chatbot.

OpenAI has reiterated that in cases where systems or reviewers detect a serious safety risk, parents may be informed – hopefully avoiding another tragedy like Raine's.

UNILADTech has reached out to OpenAI for comment.

If you or someone you know is struggling or in a mental health crisis, help is available through Mental Health America. Call or text 988, or chat at 988lifeline.org. You can also reach the Crisis Text Line by texting MHA to 741741.