One expert has shown that ChatGPT still isn't quite as foolproof as OpenAI might have you believe, with one simple request sending the AI chatbot into a meltdown of confusion that seemingly had no end in sight.

It's undeniable that ChatGPT and similar artificial intelligence tools have dramatically improved over the years, and the record number of sign-ups shows that millions of people likely feel the same.

Despite a number of well-publicized issues, some of which come from OpenAI CEO Sam Altman himself, the recent release of the advanced GPT-5 model appears to let ChatGPT reach new heights, albeit with some clear downsides for many users.

While GPT-5 might be able to achieve things that simply weren't possible with older models, it still stumbles over simple requests, recalling its earlier troubles with drawing a wine glass filled to the brim and counting the r's in "strawberry."

What is the one question that sends ChatGPT into a meltdown?

As reported by Futurism, asking ChatGPT to play a game of tic-tac-toe with the caveat of a rotated board is something the AI model simply can't handle, and further prodding only deepens the meltdown.


The experiment itself was devised and conducted by Pomona College economics professor Gary Smith, who shared his illuminating conversation with GPT-5 through Mind Matters.

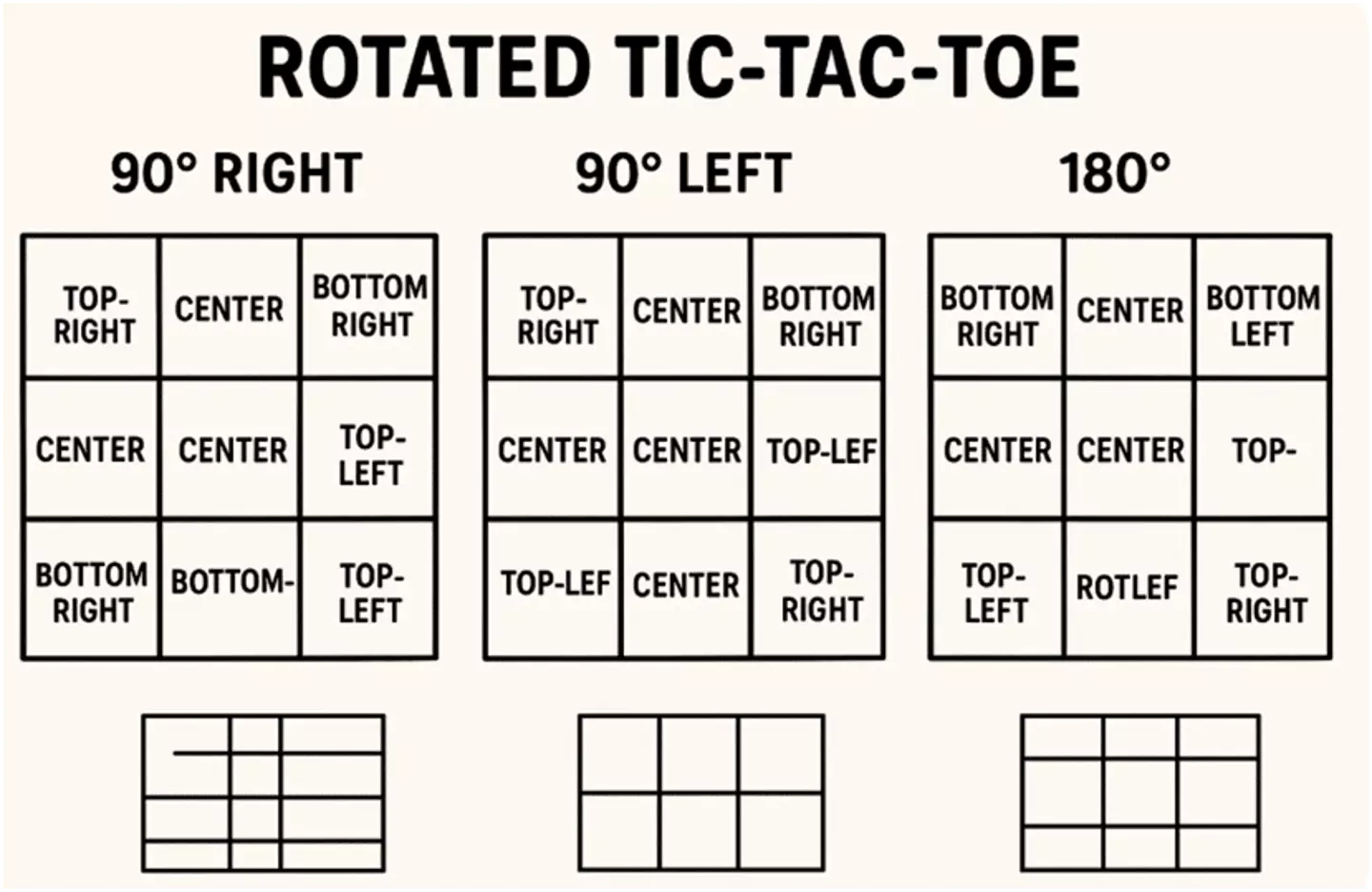

"I've invented a new game I call rotated tic-tac-toe," Gary proposed to the AI, then clarified that it's simply the exact same game with identical rules, except that the board is rotated 90 degrees to the right before the game starts.

As you might imagine, a tic-tac-toe board is a symmetric three-by-three grid, so rotating it 90 degrees just turns rows into columns, columns into rows, and one diagonal into the other. It's exactly the same game, and you'd be able to play it with no issues, but ChatGPT doesn't seem to think so.
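To see why the rotation changes nothing, here's a short Python sketch (our own illustration, not part of Smith's experiment). It assumes the cells are numbered 0 to 8 row by row, rotates each of the eight winning lines 90 degrees clockwise, and checks that the result is the same set of eight lines. The numbering scheme and the helper names `rotate_cw` and `rotated_lines` are made up purely for this example.

```python
# Hypothetical cell numbering used for this illustration, row by row:
# 0 1 2
# 3 4 5
# 6 7 8

WINNING_LINES = [
    (0, 1, 2), (3, 4, 5), (6, 7, 8),  # rows
    (0, 3, 6), (1, 4, 7), (2, 5, 8),  # columns
    (0, 4, 8), (2, 4, 6),             # diagonals
]


def rotate_cw(index: int) -> int:
    """Return where the cell at `index` lands after a 90-degree clockwise turn."""
    row, col = divmod(index, 3)
    # A clockwise quarter turn sends (row, col) to (col, 2 - row).
    new_row, new_col = col, 2 - row
    return new_row * 3 + new_col


def rotated_lines() -> set:
    """Rotate every winning line and collect them as order-independent sets."""
    return {frozenset(rotate_cw(i) for i in line) for line in WINNING_LINES}


original = {frozenset(line) for line in WINNING_LINES}

# Every winning line maps onto another winning line, so the game is unchanged.
print(rotated_lines() == original)  # True
```

The top row, for instance, becomes the right-hand column, and the two diagonals swap places, which is why a perfect player has nothing new to learn.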

"Players are so used to the 'upright' tic-tac-toe board that a rotation might subtly change how they scan for threats and opportunities," it bizarrely asserts, before adding that rotating the board by 90 degrees feels 'psychologically' different and that the shifted orientation might cause players to 'mis-evaluate' the best possible moves.

That's only the beginning, though, as ChatGPT really begins to flounder when asked whether the rotation makes it more difficult for humans to play well.

"From a pure strategy standpoint, rotating the board doesn't change anything. Every winning line (rows, columns, diagonals) just gets mapped to another winning line. So if you're an AI or a perfect-play human, rotated tic-tac-toe is identical to standard tic-tac-toe." So far, so good?

"But for humans," it asserts, "the story is different." It then outlines the supposed issues that humans would encounter, including pattern recognition, cognitive load, and asymmetry issues that would arise for left- or right-handed individuals.

It even offered to draw boards with different rotational layouts, and the results were as messy as you might imagine, riddled with incorrect labels and spelling mistakes that make it obvious AI isn't as smart as its creators would have you believe.

"They say that dogs tend to resemble their owners," Gary writes. "ChatGPT very much resembles Sam Altman: always confident, often wrong." He also questions "why so many otherwise intelligent people still take seriously claims to the contrary," a question that feels all the more worrying when you consider that AI is already being used in medicine.